tar: .: implausibly old time stamp 1970-01-01 12:00:00

Thoughts on Java, Groovy and Agile Practices

    <!-- Run SchemaSpy in a forked JVM; connection settings come from build properties -->
    <java jar="lib/schemaSpy_4.0.0.jar" fork="true">
      <arg line="-t udbt4"/>
      <arg line="-host ${db.host}"/>
      <arg line="-port ${db.port}"/>
      <arg line="-db ${database}"/>
      <arg line="-u ${db.userid}"/>
      <arg line="-p ${db.password}"/>
      <arg line="-all"/>
      <arg line="-schemaSpec (PMH)|(SHO)"/>
      <arg line="-dp ${DB2_LIB}/db2jcc.jar:${DB2_LIB}/db2jcc_license_cu.jar"/>
      <arg line="-o ${reports.data.model.dir}"/>
    </java>
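
For anyone who wants to run the same report outside Ant, here is a rough sketch of how the equivalent command line could be assembled in Java. The flags and jar names are copied from the build file above. The class name, the method signature and the sample values in main are hypothetical, made up for illustration only. Each flag and value is a separate list element, so the (PMH)|(SHO) pattern needs no shell quoting.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper: mirrors the <arg> lines of the Ant target as a plain argument list.
final class SchemaSpyCommand {

    static List<String> build(String host, String port, String database,
                              String user, String password,
                              String db2Lib, String outputDir) {
        List<String> cmd = new ArrayList<>();
        cmd.add("java");
        cmd.add("-jar");
        cmd.add("lib/schemaSpy_4.0.0.jar");
        option(cmd, "-t", "udbt4");
        option(cmd, "-host", host);
        option(cmd, "-port", port);
        option(cmd, "-db", database);
        option(cmd, "-u", user);
        option(cmd, "-p", password);
        cmd.add("-all");
        // One argument, passed verbatim: no shell is involved, so '|' and '()' are safe.
        option(cmd, "-schemaSpec", "(PMH)|(SHO)");
        // The ':' separator is copied from the build file as-is.
        option(cmd, "-dp", db2Lib + "/db2jcc.jar:" + db2Lib + "/db2jcc_license_cu.jar");
        option(cmd, "-o", outputDir);
        return cmd;
    }

    // Appends a flag followed by its value.
    private static void option(List<String> cmd, String flag, String value) {
        cmd.add(flag);
        cmd.add(value);
    }

    public static void main(String[] args) {
        // Sample values are placeholders, not real connection details.
        List<String> cmd = build("dbhost", "50000", "MYDB", "user", "secret",
                                 "/opt/db2/java", "reports/data-model");
        System.out.println(String.join(" ", cmd));
    }
}
```

The resulting list can be handed to new ProcessBuilder(cmd).inheritIO().start() to launch the report. The sketch only prints the command, so it runs without SchemaSpy or a database present.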

The last graph I posted didn't really do the Crap4J Hudson plugin justice, especially since Daniel Lindner fixed the bug where percentage figures disappeared when Crappyness was below 1%.

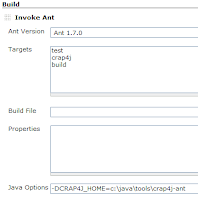

Set ANT_OPTS="-DCRAP4J_HOME=c:\java\tools\crap4j-ant", where c:\java\tools\crap4j-ant is the location of your Crap4J Ant tasks.

the output from your Crap4J ant task.

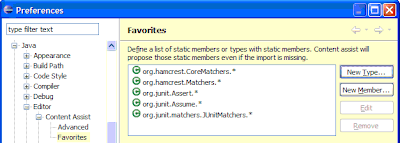

Adding the static types shown here to your Eclipse Favorites will make your JUnit 4.4 journey a lot smoother.

    assertThat(e.getMessage(), both(startsWith("Invalid environment")).and(containsString(environmentName)));

as opposed to the more common:

    assertThat(e.getMessage(), allOf(startsWith("Invalid environment"), containsString(environmentName)));

It's debatable which is cleaner. The top statement reads closer to English, but it is longer, more complex to construct, and can't have additional matchers added in the same way that allOf can.
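
To make the difference between the two styles concrete, here is a minimal sketch that runs without JUnit or Hamcrest on the classpath. It uses java.util.function.Predicate as a stand-in for a Hamcrest Matcher. The helper names only mirror the real ones, and both, allOf, startsWith and containsString below are hypothetical re-implementations, not the library's code.

```java
import java.util.function.Predicate;

// Stand-in sketch: Predicate plays the role of a Hamcrest Matcher here.
final class MatcherSketch {

    static Predicate<String> startsWith(String prefix) {
        return s -> s.startsWith(prefix);
    }

    static Predicate<String> containsString(String part) {
        return s -> s.contains(part);
    }

    // Chained style: both(a).and(b) combines matchers pairwise.
    static <T> Predicate<T> both(Predicate<T> first) {
        return first;
    }

    // List style: takes any number of matchers in a single call.
    @SafeVarargs
    static <T> Predicate<T> allOf(Predicate<T>... matchers) {
        return value -> {
            for (Predicate<T> matcher : matchers) {
                if (!matcher.test(value)) {
                    return false;
                }
            }
            return true;
        };
    }

    public static void main(String[] args) {
        String message = "Invalid environment: staging";

        boolean chained = both(startsWith("Invalid environment"))
                .and(containsString("staging"))
                .test(message);

        // A third condition is just one more argument to allOf.
        boolean listed = allOf(startsWith("Invalid environment"),
                               containsString("staging"),
                               containsString(":"))
                .test(message);

        System.out.println(chained + " " + listed); // prints "true true"
    }
}
```

In the list style a further condition is simply another argument, whereas the chained style grows by one more call per condition.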